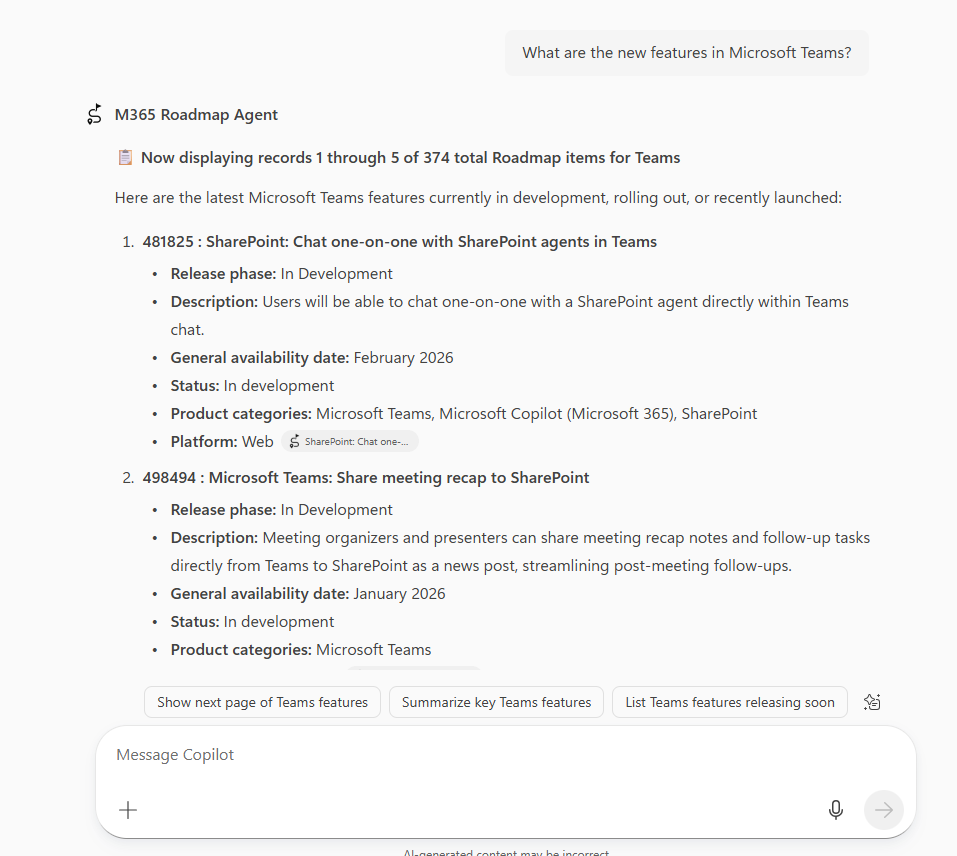

This blog post will dive into how a Copilot agent goes from this:

“What are the new features in Microsoft Teams?”

to this: https://www.microsoft.com/releasecommunications/api/v2/m365?$filter=products/any(p:+contains(tolower(p),+'teams'))&$top=0&$count=true

and outputs this:

Introduction

Developers have long wrestled with interpreting user input, often writing complex explicit logic to translate intent into actionable queries. This approach works, but it’s tedious, error-prone, and hard to maintain. Modern AI tools can automate much of this complexity, freeing developers to focus on building great experiences rather than parsing logic. Instead of manually mapping every possible input, AI can understand user intent and generate sophisticated OData queries on the fly. In this post, we’ll explore how AI transforms this workflow, using a Copilot agent, the M365 Roadmap Agent, as a case study. You’ll see how AI processes user input step by step, from parsing natural language to producing OData queries, making intent detection smarter, faster, and more scalable.

Parsing User Input

The first step in the process is parsing the user’s input. When a user submits a query or request, the AI system analyzes the text to understand its structure and meaning. This involves breaking down the input into smaller components, such as keywords, phrases, and context. Natural Language Processing (NLP) techniques are employed to identify the intent behind the user’s request.

M365 Copilot agents utilize advanced tokenization techniques to break the input into manageable pieces. Each token is analyzed for its semantic meaning, and entities such as dates, project names, and user roles are recognized using Named Entity Recognition (NER) models. Context is maintained throughout the conversation by keeping track of previous interactions and user preferences, allowing the AI to provide more relevant responses.

In the case of the M365 Roadmap Agent, when a user inquires about specific features or updates, the AI parses the input to identify key terms related to Microsoft 365 services, feature names, and timelines. This ensures that the subsequent intent detection phase has a clear understanding of what the user is asking for.

Intent Detection

Once the input is parsed, the AI system moves on to intent detection. This stage involves determining the user’s goal or purpose in making the request. Machine learning models are trained on large datasets to recognize patterns in user behavior and language, enabling the AI to accurately classify the intent. Common intents might include searching for information, updating a record, or generating a report. The AI leverages techniques such as classification algorithms and deep learning to enhance its ability to detect intent accurately. By analyzing the context and nuances of the user’s input, the AI can differentiate between similar requests and provide precise interpretations of what the user wants to achieve.

In the case of the M365 Roadmap Agent, a common user prompt might be, “What are the new features in Microsoft Teams?” The AI recognizes the intent as a request for information about specific features, allowing it to retrieve the relevant data from the OData service.

Mapping Intent to OData Query

After identifying the user’s intent, the AI system maps this intent to an appropriate OData query. OData (Open Data Protocol) is a standardized protocol for building and consuming RESTful APIs. OData provides a uniform way to describe both the data and the data model, enabling interoperability between diverse systems. The AI leverages its understanding of the intent to construct a query that retrieves or manipulates data in accordance with the user’s request. This involves selecting the right entities, filters, and parameters to form a valid OData query.

In the M365 Roadmap Agent, when the AI detects the intent to retrieve new features in Microsoft Teams, it maps this intent to a specific OData query. The AI constructs the query by identifying the relevant entity (features) and applying a filter that narrows the results to those related to Microsoft Teams. It can also append paging and counting parameters, such as the $top and $count options in the URL at the top of this post. The filter portion of the resulting OData query looks like this:

https://www.microsoft.com/releasecommunications/api/v2/m365?$filter=products/any(p:+contains(tolower(p),+'teams'))

Note 1: The AI dropped the word ‘Microsoft’ when translating the user’s prompt into the OData query. It understands that ‘Microsoft’ is not needed in the query, since all items are related to Microsoft 365.

Note 2: The AI uses the contains function to filter on the product name, so that only features related to Microsoft Teams are returned.

Note 3: The AI uses tolower to make the filter case-insensitive, which makes the query more robust.

Note 4: The AI uses the $filter parameter to specify the filtering criteria.
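The notes above can be reproduced by hand to see what the agent is effectively assembling. The following is a minimal sketch, not the agent's actual implementation: the helper names product_filter and build_url are hypothetical, and the only facts taken from this post are the base URL and the shape of the filter.

```python
from typing import Optional
from urllib.parse import quote_plus

BASE_URL = "https://www.microsoft.com/releasecommunications/api/v2/m365"


def product_filter(term: str) -> str:
    """Build a case-insensitive 'any product contains term' filter (hypothetical helper)."""
    # Lowercase the term to match tolower(p); double single quotes for OData string literals.
    safe = term.lower().replace("'", "''")
    return f"products/any(p: contains(tolower(p), '{safe}'))"


def build_url(filter_expr: str, top: Optional[int] = None, count: bool = False) -> str:
    """Attach $filter, and optionally $top and $count, to the base URL."""
    # Leave OData punctuation unescaped and turn spaces into '+', as in the URLs in this post.
    params = ["$filter=" + quote_plus(filter_expr, safe="/():,'")]
    if top is not None:
        params.append(f"$top={top}")
    if count:
        params.append("$count=true")
    return BASE_URL + "?" + "&".join(params)


print(build_url(product_filter("Teams"), top=0, count=True))
```

Calling it with "Teams", top=0 and count=True yields the same URL shown at the top of this post.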

How does the AI know how to do this mapping?

The roadmap-openapi.json file plays a crucial role in this process by providing the AI with the necessary schema and endpoint definitions. It outlines the available entities, their relationships, and the parameters that can be used in OData queries. This structured information allows the AI to accurately map user intents to valid OData queries without requiring manual intervention from developers.

The description and summary fields in the roadmap-openapi.json file provide contextual information about each API endpoint and its purpose. This helps the AI understand what each endpoint is designed to do, allowing it to select the most appropriate one based on the detected intent. For example, the summary for the features endpoint might read “Retrieve a list of new features in Microsoft 365,” which directly aligns with the user’s request. Additionally, the examples objects provide sample queries and responses that the AI can reference when constructing its own OData queries. These examples serve as templates, guiding the AI in forming correct syntax and structure for the queries it generates.

The summary field offers a concise overview of the endpoint’s functionality, making it easier for the AI to quickly grasp its purpose during the mapping process. In contrast, the description field provides a more detailed explanation, including any nuances or specific use cases that may influence how the AI constructs the query. By leveraging both fields, the AI can ensure that it accurately interprets the user’s intent and generates an appropriate OData query that aligns with the intended functionality of the API.


In this example, the summary field provides a brief overview of the endpoint’s purpose, while the description field offers more detailed information about its functionality and usage. The AI can use this information to accurately map user intents to the appropriate API endpoint and construct valid OData queries.

The examples section in the OpenAPI specification is vital for delineating parameters and guiding the AI in query construction. By providing concrete examples of how to use various parameters, the AI can better understand the expected input formats and values. This helps prevent errors in query generation and ensures that the AI can produce OData queries that are both syntactically correct and semantically meaningful. For instance, if an example demonstrates how to filter results by a specific product name, the AI can replicate this structure when generating queries based on user requests.


In this example, the examples section provides a clear illustration of how to filter roadmap items by title. The AI can use this example to understand how to construct similar filters when responding to user queries about specific products or features.

The value field within the examples section specifies the actual filter expression that can be used in the OData query. In this case, the value “contains(tolower(title), 'teams')” indicates that the AI should construct a filter to find roadmap items where the title contains the word “teams,” regardless of case. This direct example helps the AI understand how to format similar filter expressions when generating queries based on user input.
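To make the role of these fields concrete, here is a rough sketch of what such a fragment could look like, written as a Python dictionary so it can be inspected directly. The path name, the description text, and the example key are assumptions for illustration and are not copied from roadmap-openapi.json. Only the summary wording and the example value come from this post.

```python
# Hypothetical fragment of an OpenAPI document; path and wording are illustrative.
SPEC = {
    "paths": {
        "/m365": {
            "get": {
                "summary": "Retrieve a list of new features in Microsoft 365",
                "description": "Returns roadmap items. Supports OData $filter expressions.",
                "parameters": [
                    {
                        "name": "$filter",
                        "in": "query",
                        "schema": {"type": "string"},
                        "examples": {
                            "titleContainsTeams": {
                                "summary": "Roadmap items whose title mentions Teams",
                                "value": "contains(tolower(title), 'teams')",
                            }
                        },
                    }
                ],
            }
        }
    }
}


def example_values(spec, path, method, param_name):
    """Return the example values declared for one parameter of one operation."""
    operation = spec["paths"][path][method]
    for param in operation.get("parameters", []):
        if param["name"] == param_name:
            return [ex["value"] for ex in param.get("examples", {}).values()]
    return []


print(example_values(SPEC, "/m365", "get", "$filter"))
```

The helper simply surfaces the templates a model would have available for the $filter parameter. The more representative those example values are, the closer the generated filters are likely to be.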

Clearly differentiating between the summary and description fields is crucial for the AI’s understanding of the API’s functionality. The summary allows for quick identification of the endpoint’s purpose, which is essential for efficient intent mapping. Meanwhile, the description provides the depth needed to understand any specific requirements or constraints associated with the endpoint. This distinction ensures that the AI can generate accurate and contextually appropriate OData queries that align with both the user’s intent and the API’s capabilities.

It is also important that the values of these fields are differentiated from one endpoint to another to avoid confusion during the mapping process. If multiple endpoints had similar summaries or descriptions, the AI might struggle to determine which endpoint best matches the user’s intent. Clear and distinct values help the AI to accurately associate user requests with the correct API functionality, leading to more precise OData query generation and ultimately a better user experience.
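One lightweight way to guard against overlapping summaries is to lint the specification before handing it to the agent. The sketch below is an assumption about how such a check could be written, not part of the M365 Roadmap Agent. The sample paths and summaries are invented for the demonstration.

```python
from collections import defaultdict

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def duplicate_summaries(spec):
    """Map each summary used by more than one operation to those operations."""
    seen = defaultdict(list)
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            # Skip non-operation keys such as path-level "parameters".
            if method in HTTP_METHODS and "summary" in operation:
                key = operation["summary"].strip().lower()
                seen[key].append(f"{method.upper()} {path}")
    return {summary: ops for summary, ops in seen.items() if len(ops) > 1}


# Invented sample: two operations share a summary, one does not.
sample_spec = {
    "paths": {
        "/m365": {"get": {"summary": "List roadmap items"}},
        "/m365/{id}": {"get": {"summary": "List roadmap items"}},
        "/products": {"get": {"summary": "List product names"}},
    }
}

print(duplicate_summaries(sample_spec))
```

An empty result means every operation has a distinct summary. Any entry in the result points at endpoints the AI may find hard to tell apart.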

How is the OpenAPI file generated?

The OpenAPI file for the M365 Roadmap API is generated by analyzing the API’s output and endpoints, which are based on the OData protocol. Since OData is a standardized way to query and update data, it provides a clear structure that can be translated into an OpenAPI specification. GitHub Copilot plays a crucial role in this process by suggesting the appropriate structure and content for the OpenAPI file based on the characteristics of the API and sample outputs. By leveraging Copilot’s capabilities, developers can efficiently create a comprehensive OpenAPI specification that accurately represents the M365 Roadmap API’s functionality and endpoints.

Here is a sample prompt that can be used with GitHub Copilot to generate an OpenAPI specification from JSON output of the M365 Roadmap API:

Given the following JSON output from the M365 Roadmap V2 API https://www.microsoft.com/releasecommunications/api/v2/m365, generate an OpenAPI specification that describes the available endpoints, parameters, and responses. The API uses OData protocol for querying and updating data. Please include the necessary components such as paths, operations, parameters, and response schemas.


Here is the resulting OpenAPI specification generated by GitHub Copilot: roadmap-openapi-yml-sample

Generating Sophisticated Queries

One of the key advantages of using AI for intent detection and query mapping is the ability to generate sophisticated queries that go beyond simple CRUD (Create, Read, Update, Delete) operations. The AI can analyze the context of the user’s request and incorporate additional logic to create more complex queries. For example, if a user asks for a report on sales data for a specific time period, the AI can automatically include date filters, aggregation functions, and other relevant parameters in the OData query.

In the M365 Roadmap Agent, the user might ask, “Are there any roadmap items that mention agent in the description, are shipping in the next 90 days, and mention Purview or Copilot in the product field?” The AI breaks this request into three parts: filtering by description, mapping the ‘shipping’ constraint to the availabilities field, and applying an OR condition to the products field. In the query below, the 90-day window was resolved to November 2025 through January 2026, and the results are ordered by most recently modified. The resulting OData query might look like this:

https://www.microsoft.com/releasecommunications/api/v2/m365?filter=contains(tolower(description),+'agent')+and+(products/any(p:+contains(tolower(p),+'purview'))+or+products/any(p:+contains(tolower(p),+'copilot')))+and+(availabilities/any(a:+a/year+eq+2025+and+(a/month+eq+'November'+or+a/month+eq+'December'))+or+availabilities/any(a:+a/year+eq+2026+and+a/month+eq+'January'))&orderby=modified+desc
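The least obvious part of that query is turning “the next 90 days” into year and month comparisons on the availabilities field. The sketch below shows one way this translation could be done by hand. It assumes, based on the query above, that each availability has a numeric year and a month given as an English month name. The function name and the start date are hypothetical.

```python
from datetime import date, timedelta

MONTHS = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
]


def availability_filter(start: date, days: int = 90) -> str:
    """Build an availabilities filter covering every month touched by the window."""
    end = start + timedelta(days=days)
    months_by_year = {}
    year, month = start.year, start.month
    # Walk month by month from the start month to the end month, inclusive.
    while (year, month) <= (end.year, end.month):
        months_by_year.setdefault(year, []).append(MONTHS[month - 1])
        year, month = (year + 1, 1) if month == 12 else (year, month + 1)

    clauses = []
    for y, names in months_by_year.items():
        month_expr = " or ".join(f"a/month eq '{m}'" for m in names)
        if len(names) > 1:
            month_expr = f"({month_expr})"
        clauses.append(f"availabilities/any(a: a/year eq {y} and {month_expr})")
    return " or ".join(clauses)


# Assumed start date; the post does not state when the prompt was issued.
print(availability_filter(date(2025, 11, 1)))
```

With a start date of 1 November 2025, the result has one clause for November and December 2025 and one for January 2026, which is the same structure as the availabilities portion of the query above (before URL encoding).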

This level of sophistication allows users to make more nuanced requests without needing to understand the underlying query language. The AI handles the complexity, ensuring that users receive accurate and relevant results based on their specific needs.

Reducing Developer Workload

By automating the process of intent detection and OData query mapping, AI significantly reduces the workload for developers. Instead of spending hours writing and debugging complex logic, developers can rely on AI to handle these tasks efficiently. This not only speeds up the development process but also minimizes the risk of errors that can arise from manual coding. Developers can now focus on designing better user experiences and delivering value through their applications.

Conclusion

The integration of AI into the development process for intent detection and OData query mapping represents a significant advancement in software development. By automating the mundane and error-prone aspects of coding, AI empowers developers to concentrate on what truly matters: achieving their desired outcomes and creating innovative solutions. As AI continues to evolve, we can expect even more sophisticated capabilities that will further enhance the development experience.